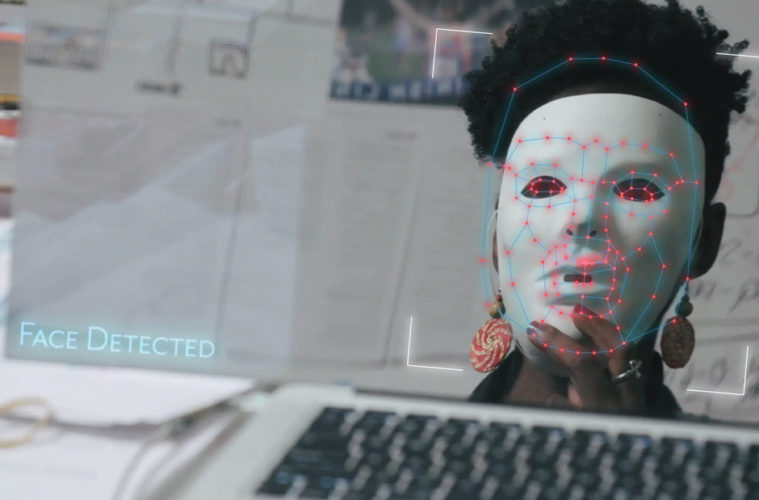

Starting with the work of Joy Buolamwini of the MIT Media Lab, Shalini Kantayya’s Coded Bias is an alarming look at the imperfections of technology trusted to make our lives easier and safer. In certain circumstances, the public has been sold a false bill of goods, from facial recognition with a 90% false-positive rate to zip-code-based algorithms that can determine sentencing, recidivism risk, and probation guidelines in the criminal justice system. As Jeff Orlowski’s The Social Dilemma also highlights, part of the problem lies with who is making these decisions: mostly white male computer programmers.

Director Kantayya masterfully takes material that in other hands might become dry and academic and, thanks in part to the engaging personality of lead subject Buolamwini, turns it into something that’s actually quite cinematic. The film highlights advocacy in the U.S. and China, the countries leading the development of AI, machine learning, and scoring systems. The difference, as the film points out, is that China is actually somewhat more transparent, openly using a draconian social credit score as social policy, while Apple, Google, Amazon, Facebook, and others develop these tools for private use and commerce inside a black box.

Coded Bias uses Buolamwini’s unique and inspiring perspective (we learn that as a girl she dreamed of one day working at the MIT Media Lab without knowing exactly what she wanted to work on) to open a larger conversation about the use of flawed biometric data on a global and local scale, from the streets of London to affordable housing in Brooklyn. Buolamwini finds a kindred spirit in Cathy O’Neil, the data scientist and author of “Weapons of Math Destruction,” who proposes an FDA for algorithms, while Buolamwini calls attention to these issues through the projects of her Algorithmic Justice League.

The problem the film unpacks in both personal and global terms is that algorithms can be inherently and unintentionally biased, not just because of the blind spots of those building them (a profession dominated by Caucasian male programmers of a certain age) but also because of the factors the data leaves out. Prison entry programs can assign a risk score to offenders based on zip code, privileging demographic data over education level and work history. These solutions are often sold with NDAs that keep the code away from the auditors and researchers who would assess the viability of what is marketed as a magic product free from human bias.

Playing with science-fiction tropes, Coded Bias is an eye-opening and important film that calls attention to a movement of resistance, led by badass female data scientists and grassroots organizations, around an important civil liberties matter. The film and its speakers point out that these technologies inherently target the poor first; London police, for example, stop and frisk individuals based on a false-positive match rather than actual evidence. The police are aware the technology is imperfect but continue to use it anyway, letting biased code stand in for probable cause. Coded Bias is bound to provoke conversation and, hopefully, policy changes around data collection and retention, as well as recognition at all levels that algorithms can easily be weaponized against a population without extensive auditing and oversight.

Coded Bias was set to screen at SXSW.